If you try to feed a baby a T-bone steak, it will die of starvation.

Steven

It’s a simple concept I reinforce to my team. In the consulting field, you must meet your customers where they are. A lot of time and energy has been put into the NIST AI Risk Management Framework (RMF). But with all this time and energy, did the NIST AI Risk Management Framework meet customers where they were? No, the NIST AI Risk Management Framework alone won’t help most companies meaningfully improve their AI governance programs. However, I only like to point out a problem if I can also offer a solution. At the end of this article, we’ll point you to additional resources to help you develop your AI governance program.

Over the last year, I’ve spent roughly 1000 hours learning about AI and AI governance. It’s a far cry from 10,000 hours to be an expert, but it’s a start. In full transparency, I stopped at the details of the chain rule in calculus and how it works in backpropagation. Still, I can explain how the concept works. I have developed and trained models from scratch using PyTorch. I’ve spent countless hours reading academic research papers. And I’m fortunate enough to work with a fantastic data science team building and implementing AI systems. Huge shout-out to Ben Prescott for spending what must have been dozens of hours with me on this topic and for sharing his concerns about real-world AI implementation. After all this research and after working on some real-world projects with customers on this topic, I wanted to share what I’ve learned with the community.

“I believe the NIST AI RMF will not help AI governance; it may make it worse.”

I know this is a bold claim, but there’s an evidence-based reason for this statement. To see if this claim holds water, let’s review the intended purpose of the NIST AI RMF. The purpose of the NIST AI RMF is, in its own words:

“In collaboration with the private and public sectors, NIST has developed a framework to better manage risks to individuals, organizations, and society associated with artificial intelligence (AI).”

To measure this Framework’s success, we need to assess whether it achieves that goal.

NIST AI RMF Documents Overview

As we go through this article, I’ll provide you with page counts of the documents I reference. We’ll tally them at the end of the article. You’ll understand why this is meaningful later.

Most individuals will likely start with two documents when adopting the NIST AI RMF: the NIST AI 100-1 document (this is the NIST AI RMF proper) and the NIST AI RMF Playbook. The NIST AI 100-1 document is 48 pages, and the AI RMF Playbook is 210. If you’re new to this space, I recommend reading the AI RMF Playbook before the NIST AI 100-1 document. For most individuals, the Playbook provides context that will be required to interpret the requirements in the Risk Management Framework.

If you’re new to AI, you will encounter unfamiliar terms in the NIST AI RMF. Another document for you is The Language of Trustworthy AI: An In-Depth Glossary of Terms. The abstract will redirect you to NIST’s website, the Trustworthy & Responsible AI Resource Center, where you will find a Google doc with the current terms and definitions. If you’re unfamiliar with terms like “anthropomorphism” and “automation bias,” you will at least need to reference the glossary while reading the NIST AI RMF and the accompanying AI RMF Playbook. At the time of this writing, there were 514 unique definitions in The Language of Trustworthy AI document.

Now that we’re set up for success, let’s get into the AI RMF content and construction.

NIST AI RMF Content

NIST AI RMF Audience

Let’s evaluate who the NIST AI RMF is for. NIST maintains a section of its website titled Trustworthy & Responsible AI Resource Center. The audience page located there describes the intended reader.

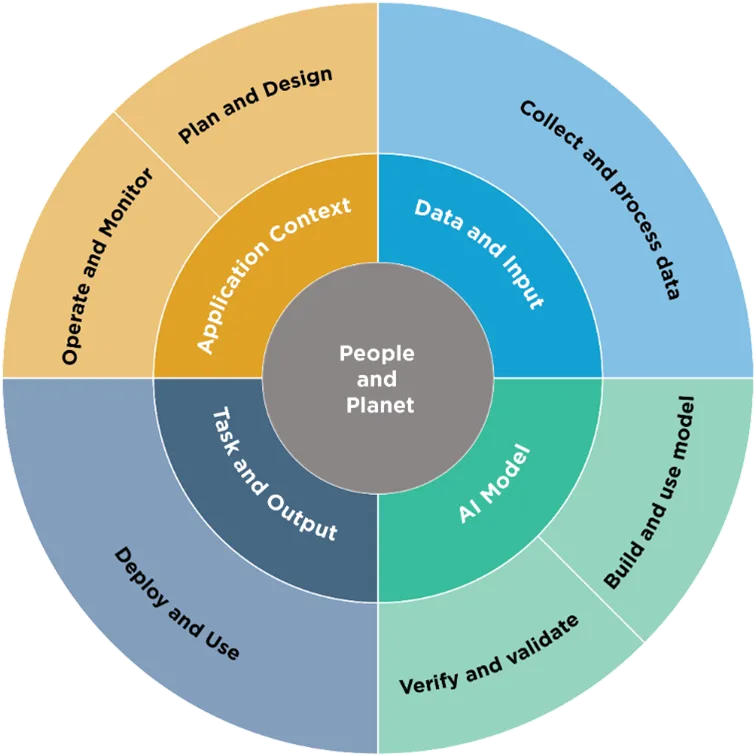

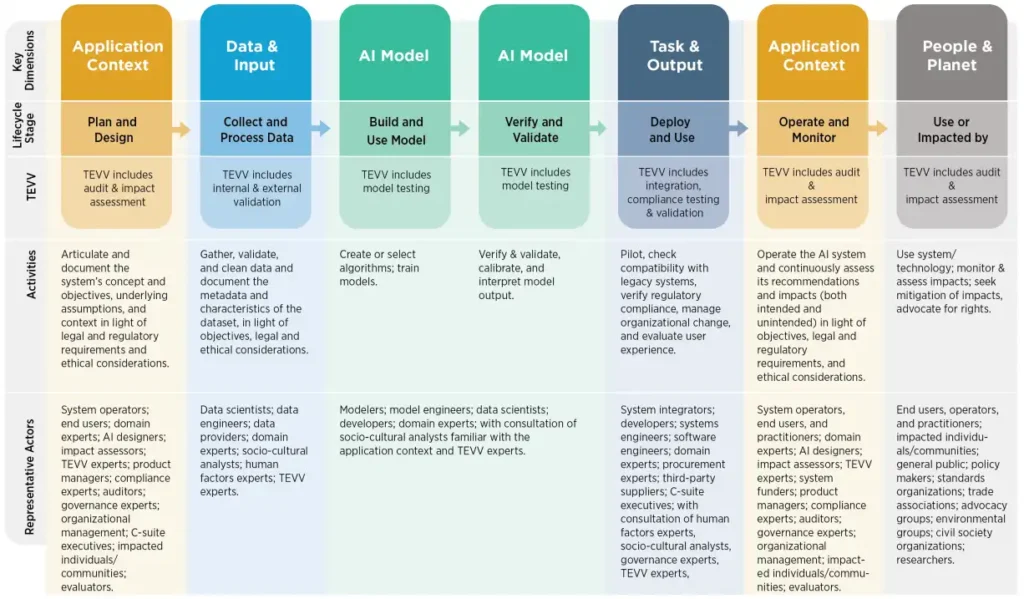

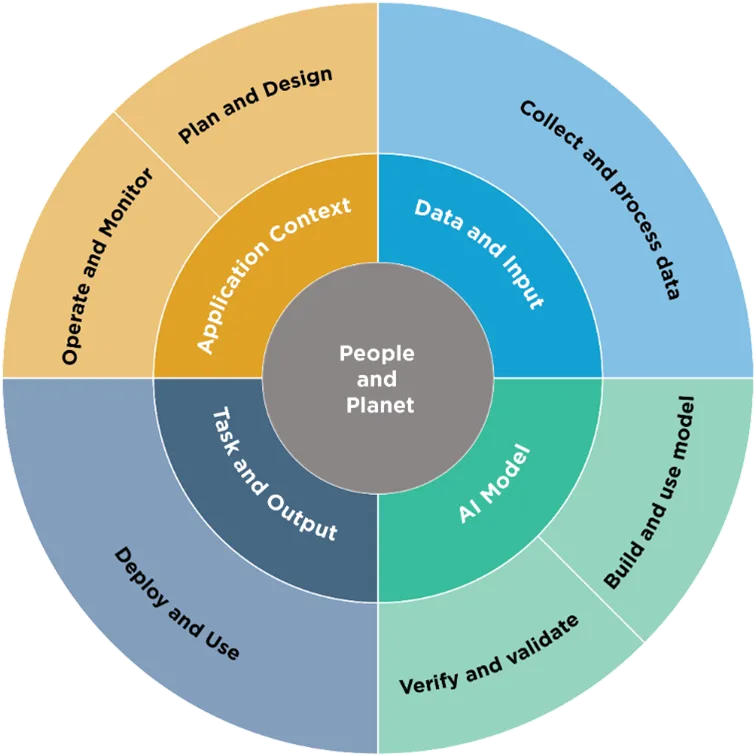

The graphics produced by NIST are helpful; they provide excellent visualization of AI lifecycle activities. Most organizations will have no role in developing AI models. However, they will play a part in the data and input dimension, specifically for fine-tuning or retrieval augmented generation. Many organizations are scrambling to understand the Application Context dimension, and ultimately, they will Deploy and Use AI systems.

This breakdown of the AI lifecycle and its crucial dimensions is terrific. However, the NIST AI RMF doesn’t expressly state which of the 72 NIST AI RMF controls are designed to govern which phase of the AI lifecycle. This will inevitably lead to overzealous governance “experts” and consultants trying to enforce control objectives where they don’t belong. For example, in the Govern 5.1 subcategory, there are elements of environmental impact assessments that read:

Verify that stakeholder feedback is considered and addressed, including environmental concerns, and across the entire population of intended users, including historically excluded populations, people with disabilities, older people, and those with limited access to the internet and other basic technologies.

NIST AI Risk Management Framework

This control objective is clearly targeted at the AI Model dimension. However, armies of AI consultants will tell you your organization must understand the environmental impact of your AI systems. And it just so happens those consultants can sell you those services for $500 an hour…

A more detailed representation of AI workflow on page 16 of the AI RMF provides expanded activities and who should be involved. However, this section doesn’t guide which AI development lifecycle steps belong to which AI Actors. Roughly speaking, an AI Actor is anyone involved in or impacted by the AI system.

AI RMF Core

If you haven’t read the NIST AI RMF, the core of the framework is broken down into four functions:

- Govern

- Map

- Measure and

- Manage

The function concept should be familiar to those already using the NIST CSF. Each of the 4 functions has anywhere from 4 to 6 categories with corresponding subcategories. In total, 72 subcategories within the AI RMF provide governance objectives. The NIST AI RMF recommends that organizations develop policy statements to guide their AI governance programs. Most AI governance policy statements will be derived from the Govern function. Some guidance in the Map function will also apply to policy, and the Measure and Manage functions mainly ensure that Govern and Map actions are carried out.

Follow my recommendation and read the NIST AI RMF Playbook first. You will find beneficial information in every subcategory. The Playbook is an excellent document. If you’re beginning your journey into AI governance, I recommend you find an ultra-wide monitor and open these two documents side by side, so that you can go line by line through the NIST AI RMF (NIST AI 100-1) and reference the NIST AI RMF Playbook as you go. You can reference the AI Glossary of Terms if you see an AI term you’re unfamiliar with.

AI RMF Profiles

The AI RMF highlights the need for “profiles” with practical situations where the RMF could be applied. However, it does not help the reader understand how to use these general concepts. What would have been helpful is a prominent reference to NIST document SP 1270, Towards a Standard for Identifying and Managing Bias in Artificial Intelligence. This document outlines concepts and details of how bias at a personal and systemic level needs to be accounted for in an AI system. Because AI systems are trained on historical data, the models themselves will reflect bigoted or biased trends from the past. The Standard for Identifying and Managing Bias in Artificial Intelligence is 86 pages. To the AI RMF document’s credit, it does list The Standard for Identifying and Managing Bias in Artificial Intelligence as a “more information” reference.

NIST AI RMF Language

AI Risks and Trustworthiness

If AI systems are going to drive cars, make medical diagnoses, or run future nuclear fusion reactors, Trust is a requirement. These tasks are things that AIs are being developed to do right now. In section 3 of the AI RMF document, NIST highlights a fundamental tenet of an AI system: Trustworthiness. They make the statement:

For AI systems to be trustworthy, they often need to be responsive to a multiplicity of criteria that are of value to interested parties. Approaches which enhance AI trustworthiness can reduce negative AI risks. This Framework articulates the following characteristics of trustworthy AI and offers guidance for addressing them. Characteristics of trustworthy AI systems include: valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with harmful bias managed.

NIST

NIST hit the nail on the head; AI will change the world in ways we don’t understand. Surely, NIST wouldn’t take a word like “trust” and complicate it. Would they? NIST commissioned UC Berkeley to produce a document defining what Trust means for AI systems, A Taxonomy of Trustworthiness of Artificial Intelligence. This document is 78 pages long with 30 pages of questions. These questions map back to the NIST AI RMF to help readers understand if their AI system is “trustworthy”. If you’re looking for the questions, they start on page 22 of the document. What’s frustrating is that the NIST AI RMF does not reference the Taxonomy of Trustworthiness of Artificial Intelligence document. So, a critical term within the NIST AI RMF, “trustworthiness”, is left open to interpretation. Interpretation leads to inconsistencies in risk evaluation, undoubtedly impacting unsuspecting communities.

Explainable and Interpretable

Two additional fundamental concepts within the NIST AI RMF are explainability and interpretability. These concepts seem similar but are different in meaningful ways. While the NIST AI RMF does an inferior job explaining this concept, it does reference another document: Psychological Foundations of Explainability and Interpretability in Artificial Intelligence. This is an outstanding document, at 56 pages.

“Interpretation refers to a human’s ability to make sense, or derive meaning, from a given stimulus.” On the other hand, explainability is described this way: “an explanation of a model result is a description of how a model’s outcome came to be.”

NIST

Section 1.2.2 of the Psychological Foundations of Explainability and Interpretability in Artificial Intelligence provides a medical diagnosis as an example. The medical example offers a series of data points an AI may use to decide that a patient’s symptoms are likely a viral infection and do not warrant antibiotics. This would be an explainable answer. On the other hand, the doctor may take the reasons for the AI model’s answer and interpret that the patient should still receive antibiotics because they are especially susceptible to bacterial infections. In the second case, a doctor has subject matter knowledge to make a better, more informed decision.

Explainability is critical for AI system development, especially when making decisions about loan processing. Section 2.1 of the Psychological Foundations paper highlights research demonstrating that people prefer the least precise representation of data where they can still make a meaningful decision. We’ll save this critically important point for later. Write it on a piece of paper so you can come back to it.

Addressing Bias and Solutions

The NIST AI RMF spends significant time discussing bias, and it gives a compelling reason why tackling bias in an AI system will be challenging. The NIST AI RMF addresses the idea of “fairness” in this way:

Fairness in AI includes concerns for equality and equity by addressing issues such as harmful bias and discrimination. Standards of fairness can be complex and difficult to define because perceptions of fairness differ among cultures and may shift depending on application… these (bias) can occur in the absence of prejudice, partiality, or discriminatory intent.

NIST

While I’ll give the NIST AI RMF a gold star for trying to address this, the real guidance is found in another supplemental document: Towards a Standard for Identifying and Managing Bias in Artificial Intelligence. The bias document consists of 86 pages of material and covers bias types, including:

- Statistical / computational biases

- Human biases

- And systemic biases

Section 3.1.1 in the Standard for Identifying Bias document outlines some inherent problems with Large Language Models requiring too much training data. The document says it best:

AI design and development practices rely on large scale datasets to drive ML processes. This ever-present need can lead researchers, developers, and practitioners to first ‘go where the data is,’ and adapt their questions accordingly. This creates a culture focused more on which datasets are available or accessible, rather than what dataset might be most suitable…

NIST

The Standard for Identifying and Managing Bias in AI publication proposes high-level solutions for managing bias in the AI development life cycle. These include:

- Pre-processing: transforming the data so that the underlying discrimination is mitigated. This method can be used if a modeling pipeline is allowed to modify the training data.

- In-processing: techniques that modify the algorithms in order to mitigate bias during model training. Model training processes could incorporate changes to the objective (cost) function or impose a new optimization constraint.

- Post-processing: typically performed with the help of a holdout dataset (data not used in the training of the model). Here, the learned model is treated as a black box and its predictions are altered by a function during the post-processing phase. The function is deduced from the performance of the black box model on the holdout dataset. This technique may be useful in adapting a pre-trained large language model to a dataset and task of interest.
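To make the pre-processing idea concrete, here is a minimal sketch of one commonly described technique, often called reweighing. It is not taken from the NIST publication; it is only an illustration of the category. Each training record gets a weight so that, after weighting, the favorable outcome is equally likely in every group. The function names and the toy data are hypothetical.

```python
from collections import Counter


def reweigh(groups, labels):
    """Return one weight per record: P(group) * P(label) / P(group, label).

    Records from (group, label) combinations that are under-represented
    relative to statistical independence receive weights above 1.
    """
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of records in `group` whose label is 1."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


# Hypothetical toy data: group "a" was historically approved far more often.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweigh(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(rate_a, rate_b)
```

A training pipeline would then pass these weights to a learner that supports per-sample weights. Whether that is appropriate depends on the use case and on what your organization is allowed to do with the training data.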

These allow the reader to explore managing bias within their AI community. Although I applaud the guidance in this document, it highlights the bias found in the NIST AI RMF itself…

Language as a Gatekeeper

Language is a powerful construct. I remember trying to understand the chain rule for backpropagation (lots of jargon there). I was frustrated by the specific language mathematicians used when describing things that should be simple. My friend working on his Ph.D. in mathematics told me: “It’s just what we call it.” Or, when someone listens to financial news, they may wonder: “What’s a basis point?”. It’s 0.01%, or one hundredth of a percent (0.0001 as a decimal), according to A Complete Guide to Basis Points. The point is that using clear, simple language is essential for something that will alter the course of human existence. That’s the problem with the NIST AI RMF.

The NIST documents are not “light” reading. I had to focus diligently as I worked through each NIST AI RMF control objective. I wanted to understand if I was deficient in some way or if this Framework was more challenging to read than the average document.

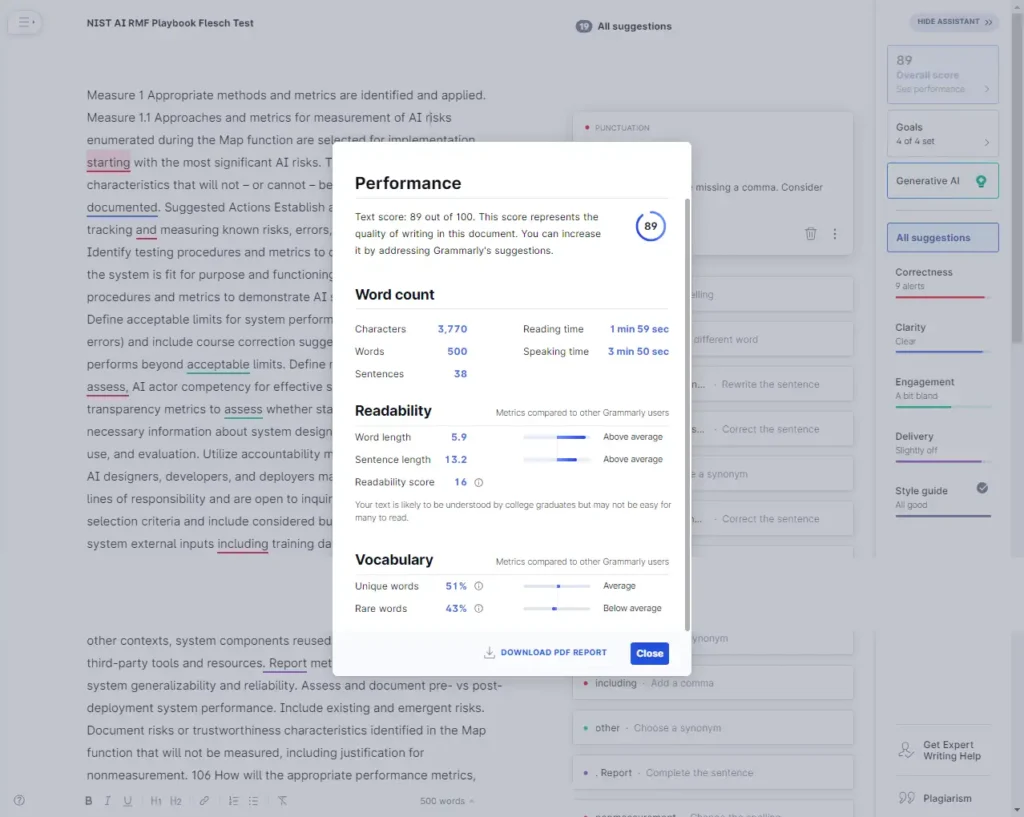

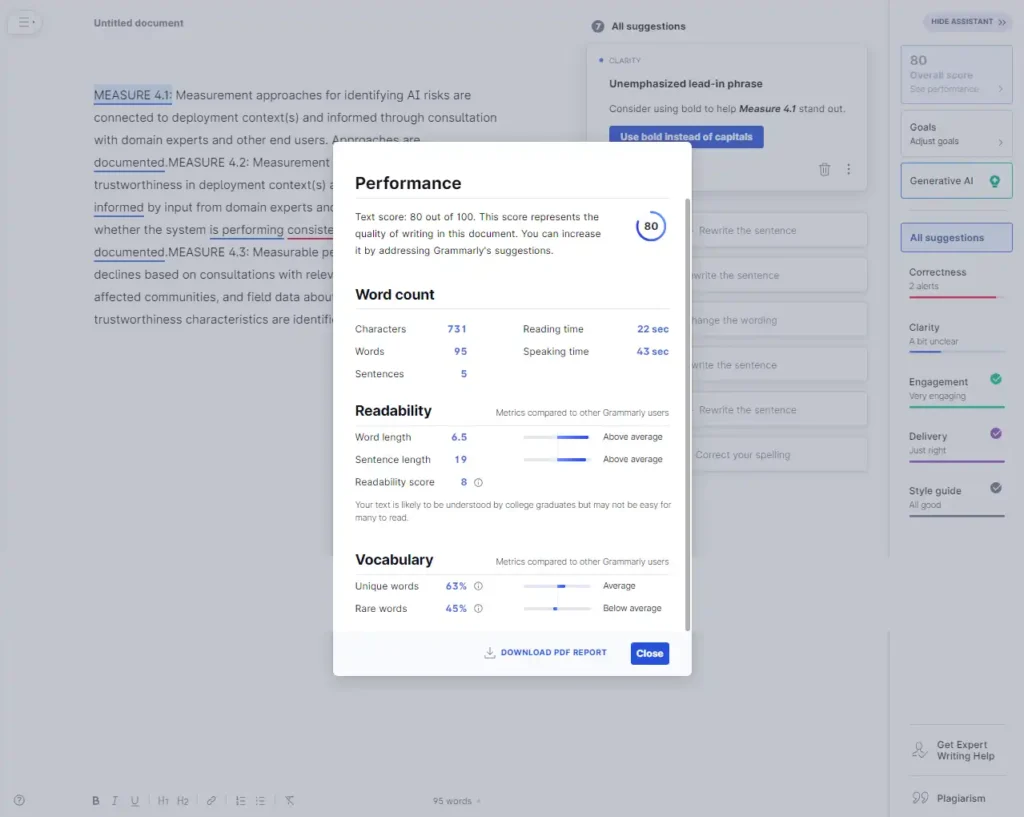

Enter the Flesch Reading Ease Score. For those unfamiliar with this score, it’s an academic measure of how difficult content is to read. I’ll save you the details; you can read them for yourself if you like, but the closer the score is to 100, the easier the text is to read. The lower the score, the more complex the document is to read. For reference, “plain English” scores 60 – 70 on the Flesch scale. Where do you think the NIST AI RMF Playbook came in?

The NIST AI RMF Playbook came in at 16. This means that the “text is likely to be understood by a college graduate but may not be easy for many (college graduates) to read.” What about the control objectives themselves in the NIST AI RMF? The reading score came back at an incredible 8, which is even more difficult.
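For readers who do want the details, the Flesch Reading Ease formula is 206.835 − 1.015 × (words per sentence) − 84.6 × (syllables per word). The sketch below is a rough approximation only: the syllable counter is a naive vowel-group heuristic, and it is not the tool that produced the scores quoted in this article, so expect its numbers to differ somewhat from any published calculator. The function names and sample sentences are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Naive estimate: count vowel groups, discounting a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Apply the Flesch Reading Ease formula to a block of text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


plain = "The cat sat on the mat."
dense = (
    "Organizational stakeholders operationalize comprehensive "
    "interdisciplinary accountability mechanisms."
)
print(round(flesch_reading_ease(plain), 1))
print(round(flesch_reading_ease(dense), 1))
```

Long sentences and long words both push the score down, which is why dense policy language lands so far below the “plain English” range.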

Why is this a problem? NIST claims that AI is a pervasive technology with truly life-changing impacts. NIST repeatedly states that the process of AI development needs to be “inclusive” and that communities that are impacted by AI need to be part of it, but it behaves in a contradictory manner. How so?

According to one study by Gallup, Assessing the Economic Gains of Eradicating Illiteracy Nationally and Regionally in the United States, 54% of Americans between 16 and 74 read below a sixth-grade level. Bias is a significant topic of concern within the AI community, and one of the primary themes of the NIST AI RMF is that these processes are inclusive and account for the impacted communities.

The language the NIST AI RMF uses is a form of gatekeeping, making knowledge less accessible to the community. Consider subject matter experts who are needed for the AI process but who do not have formal education. How are the AI community members intended to consume this document? How can “impacted communities” have Trust in AI safeguards if the standards are not easily understood? They can’t…

What the NIST AI RMF Doesn’t Give You

The biggest problem with the NIST AI RMF is what it doesn’t give you. You can read all these pages of dense academic writing without knowing how a large language model works. How can you protect something when you don’t understand how it works? The documents provided so far do not provide a fundamental concept of how to implement AI governance in a real-life scenario, and there are no examples of AI attacks that your governance program should protect you from.

A Basic Education on AI Attacks

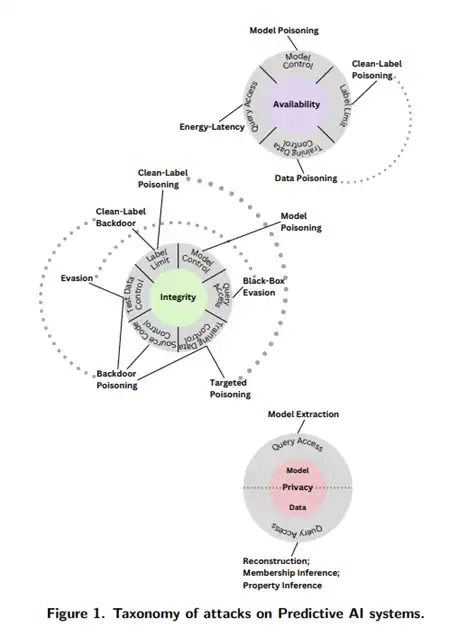

I’ve spent time in this article discussing why the NIST AI RMF misses the mark. However, NIST has provided fantastic documentation; I wish it got more press. To begin educating yourself on AI attacks, you need another NIST document (my favorite of the group): Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations. This taxonomy of attacks comes in at 107 pages and begins to expose us to the types of attacks that AI systems will deal with. Nowhere in the NIST AI RMF is the reader directed to this excellent resource. The adversarial taxonomy document focuses on the left side of the “audience” circle referenced at the beginning of this article. It provides the reader with at least a cursory education on the challenges they will face as part of the AI development lifecycle.

The taxonomy covers high-level attacks and mitigation activities for predictive and generative AI systems. It breaks down the stages of AI learning and the attacks relevant to each stage. The attack taxonomy document is the first thing a technical resource should read when learning about AI security threats. These data points are critical to understand so that individuals building governance models know which mistakes and attacks they are trying to mitigate.

Each item identified in this figure needs governance applied to mitigate human error or an adversary objective.

Where Should You Start if You’re Interested in AI Governance

After going through the NIST documents we’ve listed, the reader will need a more technical understanding of how AI mistakes are made in the AI life cycle and how attacks are carried out against AI systems. Readers will understand the various governance risks and how they should be managed. If you want to begin to understand how AI works to manage risks effectively, where could you start?

A Basic Education on Large Language Models (LLMs)

Some excellent resources are available online if you want to dive deeper into AI and AI governance. Suppose you’re willing to give 8 minutes of your time. In that case, IBM has a video on YouTube that provides a high-level overview of Generative AI: What are Generative AI models? If you’re willing to give another 22 minutes, Google has an Introduction to Generative AI on YouTube. If you’re willing to spend 60 more minutes, Andrej Karpathy has a video, Intro to Large Language Models, on YouTube.

Andrej Karpathy is one of the leading contributors to Tesla’s artificial intelligence program Autopilot and a former OpenAI researcher. Amazingly, the best people on this topic are providing free and open education.

After going through these lessons, I would leverage a platform like replicate.com to see how Generative AI works behind the scenes. You can play with system prompts, temperature, top_p values, etc. Only once you’ve gone through these foundational educational sessions would I start consuming the documentation provided by NIST.
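If temperature and top_p are new to you, the sketch below shows the general idea behind both settings using a made-up three-token vocabulary. It is a simplified illustration under my own assumptions, not the implementation used by replicate.com or any particular model provider, and the function names and scores are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose combined
    probability reaches top_p, then renormalize over that set."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept = []
    cumulative = 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


# Hypothetical raw scores for three candidate next tokens.
logits = [2.0, 1.0, 0.0]

cool = softmax_with_temperature(logits, 0.5)   # more deterministic
warm = softmax_with_temperature(logits, 1.0)   # more varied
nucleus = top_p_filter(warm, 0.9)              # drops the unlikely tail

print([round(p, 3) for p in cool])
print([round(p, 3) for p in warm])
print([round(p, 3) for p in nucleus])
```

Lower temperature concentrates probability on the most likely token, and a lower top_p removes unlikely tokens from consideration entirely. Both settings change how predictable the output is, which matters when you are deciding how much variation a governed use case can tolerate.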

Conclusion

I’ve provided you with a lot of data to consider in this article. The intent was not to create a puff piece for clicks but to provide you with the complexities of developing a governance program for AI systems. As I stated at the beginning of this document, I’ve spent about 1000 hours on my AI journey. By that measure, I’m 1/10th of an expert. I hope I have piqued your interest enough that you read these additional documents and do not settle for surface knowledge. All that being said, how does it justify the statement?

I believe the NIST AI RMF will not help AI governance; it may make it worse.

Steven

Remember that note I told you to take in the section on explainability and interpretability? The statement was:

People prefer the least precise representation of data where they are still able to make a meaningful decision.

NIST

This works in humans because, as the research paper points out, people have developed intuition over years of experience. This is not possible for 99.99% of the population when it comes to AI. This puts how people prefer to make decisions, low precision backed by experience, in direct contradiction with the current state of AI: low education, low personal experience.

After talking to dozens of organizations and leaders who are starting their AI governance journey, the general approach is:

- Download the RMF,

- put the controls in a spreadsheet and

- document and enforce governance objectives.

The danger is that the NIST AI RMF does not come close to providing the reader with the education required to be successful. Nor does the NIST AI RMF inform the reader that there is a body of knowledge they must understand before attempting to implement the NIST AI RMF.

The NIST AI RMF is like junk food. It’s dense and has many “calories” in the form of complex words, but it doesn’t provide much nutritional value. You need all the additional documents we referenced in this article for that additional AI nutrition. Maybe I’m being too hard on the document; maybe it is more like steak. However, most individuals undertaking an AI governance program are not prepared for it. Don’t let that be you!

| Document Title | Pages | URL |
| --- | --- | --- |
| A Taxonomy of Trustworthiness of Artificial Intelligence | 78 | download |
| Psychological Foundations of Explainability and Interpretability in Artificial Intelligence | 56 | download |
| Towards a Standard for Identifying and Managing Bias in Artificial Intelligence | 86 | download |
| Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations | 107 | download |
| The AI RMF Playbook | 210 | download |
| The NIST AI RMF (NIST AI 100-1) | 48 | download |

If you were to read the documents that amount to a basic understanding of how to govern an AI program according to NIST, you’re in for a 585-page read, plus 514 AI terms and definitions. There is a non-trivial likelihood that individuals tasked with AI governance will not find or read this additional documentation. AI Governance owners may need more education to make informed risk-based decisions. This situation can lead to people believing they are informed about AI risks when they are not.
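For anyone who wants to check the tally, here is a small sketch that adds up the page counts quoted in this article. The dictionary keys are shortened titles of my own choosing, and the 48 and 210 figures follow the page counts given in the documents overview earlier in the article.

```python
# Page counts as quoted in this article.
page_counts = {
    "NIST AI RMF (NIST AI 100-1)": 48,
    "AI RMF Playbook": 210,
    "Taxonomy of Trustworthiness": 78,
    "Psychological Foundations of Explainability": 56,
    "Identifying and Managing Bias (SP 1270)": 86,
    "Adversarial Machine Learning Taxonomy": 107,
}

core_documents = ["NIST AI RMF (NIST AI 100-1)", "AI RMF Playbook"]

total_pages = sum(page_counts.values())
core_pages = sum(page_counts[name] for name in core_documents)
supplemental_pages = total_pages - core_pages
supplemental_share = supplemental_pages / total_pages

print(total_pages)
print(core_pages, supplemental_pages)
print(f"{supplemental_share:.0%} of the reading sits outside the two core documents")
```

By this count, more than half of the reading sits in supplemental documents that someone who only downloads the RMF and the Playbook may never open.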

Actual AI governance and security education are critical, but it shouldn’t take 500 pages to get the point across. There needs to be a simple, concise set of guidelines that organizations can read based on regulatory use cases.

As AI agents are deployed in critical environments, this lack of understanding can lead to catastrophic consequences. We mentioned earlier that AI systems are currently being designed to drive cars, make medical diagnoses, or run future nuclear fusion reactors. The consequences of overestimating one’s abilities involve life and death.

It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.

Mark Twain (maybe)

If you enjoy this content and would like to see more like it, let me know in the contact form below! It takes tremendous time and research to make this kind of content possible.